Sampling

Sampling is the process of selecting subjects for a study. Generally, the subjects are the specific people who are studied in an experiment or surveyed. The sample is drawn from a larger population.

Why sample? The population you are interested in is usually too large to study in its entirety, so you study just part of that population (a sample).

What could be some problems with sampling (examples of poor sampling)? A biased sample?

To reduce the problems of poor sampling, use random sampling whenever you can. In random sampling, all members of a population have an equal chance of being selected for the sample.
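The "equal chance" idea can be made concrete with a short simulation. This is a hypothetical Python sketch, not part of the original post: it assumes a made-up population of 1,000 numbered people and uses the standard library's `random.sample`, which draws without replacement so that every member is equally likely to be selected. The helper name `simple_random_sample` is illustrative only.

```python
import random
from collections import Counter


def simple_random_sample(population, n, rng):
    """Select n distinct members; each member is equally likely to be chosen."""
    return rng.sample(population, n)


# Hypothetical population of 1,000 people, identified by ID number.
population = list(range(1, 1001))
rng = random.Random(42)  # fixed seed so the draw can be reproduced
sample = simple_random_sample(population, 50, rng)
print(len(sample), len(set(sample)))  # 50 people, no one picked twice

# Check the "equal chance" property on a tiny population: draw a sample of
# 2 from 5 people many times and count how often each person is included.
small_population = ["A", "B", "C", "D", "E"]
trials = 100_000
counts = Counter()
for _ in range(trials):
    counts.update(simple_random_sample(small_population, 2, rng))

for person in small_population:
    # Each share should be close to 2/5 = 0.4.
    print(person, round(counts[person] / trials, 3))
```

Fixing the seed only makes the draw repeatable. In a real study, the list you draw from must include every member of the population; anyone missing from that list has no chance of selection at all, and the sample is no longer truly random.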

------------

What are internal and external validity?

With these types of validity, we are looking at the validity of the overall study, not just the validity of the instruments used to measure the variables.

You are asking the question: Is it a valid study? Not: Is it a valid instrument?

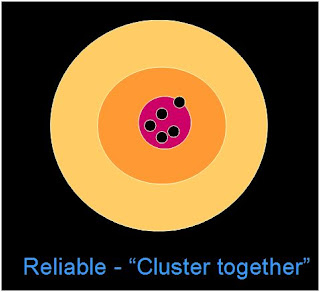

Internal validity: Can the conclusions be trusted for this particular study? Or, are the results valid for the subjects in your sample? For a visual representation, look at the orange circle at the top. The black dots inside the orange circle are the subjects in the sample.

External validity: To whom do the conclusions apply? This is the generalizability of the findings: can the results be generalized to the larger population? For a visual representation, see the orange circle within the pinkish-purple circle. The orange circle represents the sample, and the pinkish-purple circle represents the larger population.

Question: Could you have very poor internal validity, but good external validity?

--------

If something goes wrong in a study, who can you blame it on? That is, if the study is not getting valid results, who can you blame it on? And you can't blame it on the alcohol. :)

What are some threats to a study’s internal validity? Or, put another way, where can you put the blame?

- Threats due to the researcher (e.g., the researcher influences the results)

- Threats due to how the research is conducted (e.g., inaccurate or inconsistent procedures, a poorly designed survey)

- Threats due to the research subjects

    - Hawthorne effect: subjects change their behavior because they know they are being studied.

    - Mortality: losing people from a study (due to death, dropping out, etc.).

    - Maturation: changes within the subjects, rather than the study itself, explain their behavior. In studies done over a period of time, the subjects may change.

See any possible threats to internal validity?

What are some threats to a study’s external validity?

- Research procedures don’t reflect everyday life

    - This is a problem of ecological validity.

- Different findings, same sample

    - Replication is important.

- Poor sampling

Any problems with studies done at universities?

Generalizability problem?


See more about me at my web site WilliamHartPhD.com.